Gina Gotthilf, VP of Growth at language education platform Duolingo, is reluctant to spill one of her favorite A/B tests, but it’s too good not to share. “You know that little red dot on your app icon that indicates there’s something new, or something unresolved? That led to a six percent increase in our DAUs. It was six lines of code. I think it took about twenty minutes. And then our V2 for the dot brought an additional 1.6% increase in DAUs,” she says.

There are a lot of lessons packed into that anecdote: You can’t predict what’s going to move the needle. Optimizing a product is always a mix of the grand and the granular. But it’s the fact that Gotthilf has these stats at her fingertips that’s most telling. A master growth marketer, she knows the most important lesson of all: anything you send out to your users — even a dot — yields valuable data. So test it all, internalize every number, and use those results to inform what you do next.

By assiduously testing every notification, app screen and line of copy (her team alone is always running at least five A/B tests), Gotthilf has overseen Duolingo’s growth from 3 million to 200 million users.

In this exclusive interview, Gotthilf examines the four A/B tests that have been most crucial to that growth. She shares the lessons and cautionary tales she’s gathered from each experiment — and the tenets born from them. Let’s begin.

Four A/B Tests To Move Forward On

We asked Gotthilf to walk us through four of the most formative A/B tests Duolingo has run, with the help of her team and Product Manager Kai Loh. Here’s the context and calculus that she shared — including the tactics that even the leanest marketing team can start implementing today.

A/B TEST #1: Delayed Sign-Up

THE QUESTION: Several years ago, Duolingo began tackling perhaps the most existential question for an app startup: what was causing the leak at the top of their funnel — and how could they stop it?

“We were seeing a huge drop-off in the number of people who were visiting Duolingo or downloading the app and signing up. And it's obviously really important for people to sign up: first, it suggests they're going to come back and, second, it signals our opportunity to turn one visit into a rewarding, continuous experience,” says Gotthilf. “That means we can send them notifications and emails. So we started thinking about what we could do to improve that.”

They posited that the fix might be counterintuitive: While it seems like you should ask users to sign up immediately, when you have the greatest number of interested users and their attention, perhaps letting people sample your product first is the most powerful pitch.

THE TESTS: “We found that by allowing users to experience Duolingo without signing up — do a lesson, see the set of skills that you can run through — we could increase those sign-up metrics significantly,” says Gotthilf. “Simply moving the sign-up screen back a few steps led to about a 20% increase in DAUs. The first foray into delayed sign-ups was before my time, but we’ve since seen a positive impact.”

Since iteration is paramount in Gotthilf’s growth org, they didn’t leave it at that. The first test had made a significant impact, and now it was time to refine it. They asked themselves: When should they ask users to sign up? In the middle of a lesson? At the end? And how should they do it?

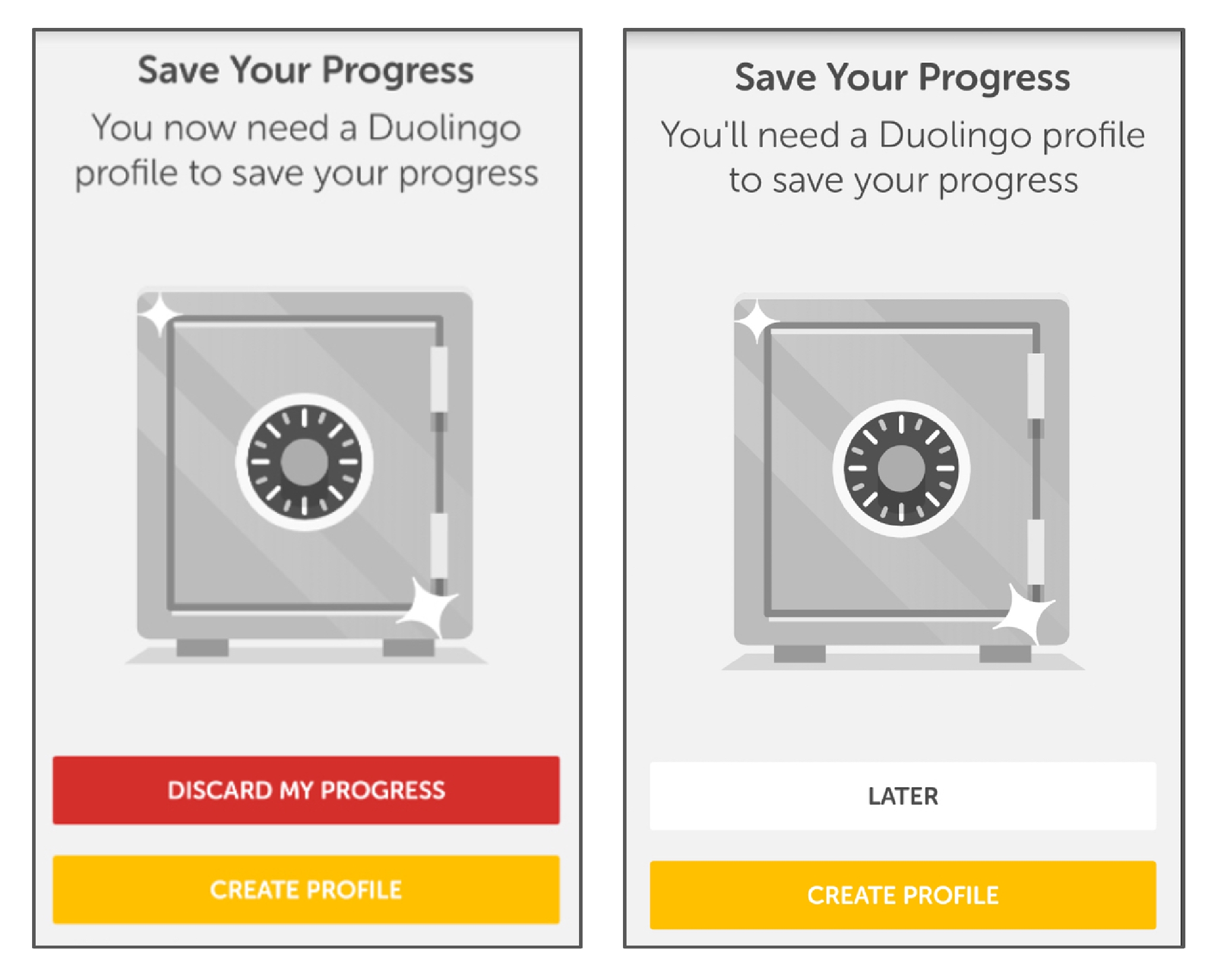

They looked at the design of the page itself, too. “At one point we thought, ‘Okay, we're moving this screen around. What else can we do?’” says Gotthilf. “There was a big red button at the bottom of the screen that said ‘Discard my progress’ — basically meaning ‘Don’t sign up.’” They suspected that many people were conditioned to simply hit the most prominent button on a screen without thinking about it — and that Duolingo was losing still-interested users. Sure enough, swapping that design for a subtle button that simply read “Later” moved the needle.

That change introduced “soft walls” — that is, optional pages that ask users to sign up, but allow them to keep going by hitting “Later” — and opened the door to a new arena for experimentation. “We have three of those soft walls now,” says Gotthilf. “Finally, there’s a hard wall, after several lessons, that basically says if you want to move forward, you have to sign up. Here’s what’s key: without those soft walls priming a sign-up as they’re ignored, those hard walls perform significantly worse.”

Together, these subsequent changes to delayed sign-up — optimizing hard and soft walls — have yielded an 8.2% increase in DAUs. “This happened about three years after the original test, so our user base was much larger. That number was extremely significant.”

THE TAKEAWAY: Test early users to determine if signing up before using your product or after is better. If the latter, form a hypothesis on the following: what is the moment when users will discover the value of your product? Estimate that point in your sign-up funnel and try a soft wall right after it. (Gotthilf recommends doing it as early as possible because, at every step, you have the potential to lose users.) Then another soft wall after a few instances of engagement. Then try a hard wall and analyze the leaks at each wall. Move walls forward or back accordingly.
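The wall-by-wall analysis in this takeaway can be sketched in a few lines of Python. This is a minimal illustration, not Duolingo's tooling: the `WallStats` fields, the `leak_report` helper and every count below are hypothetical. A "leak" here is assumed to mean a user who saw a wall and then neither signed up nor continued.

```python
from dataclasses import dataclass


@dataclass
class WallStats:
    name: str        # e.g. "soft wall 1" (hypothetical label)
    shown: int       # users who reached this wall
    signed_up: int   # users who signed up at this wall
    continued: int   # users who tapped "Later" and kept going


def leak_report(walls):
    """Return (name, sign-up rate, leak rate) for each wall.

    Leak = users who saw the wall but neither signed up nor continued.
    """
    report = []
    for w in walls:
        if w.shown == 0:
            report.append((w.name, 0.0, 0.0))
            continue
        left = w.shown - w.signed_up - w.continued
        report.append((w.name, w.signed_up / w.shown, left / w.shown))
    return report


# Hypothetical counts, for illustration only.
funnel = [
    WallStats("soft wall 1", shown=1000, signed_up=200, continued=600),
    WallStats("soft wall 2", shown=600, signed_up=150, continued=300),
    WallStats("hard wall", shown=300, signed_up=180, continued=0),
]

for name, signup_rate, leak_rate in leak_report(funnel):
    print(f"{name}: sign-up {signup_rate:.0%}, leak {leak_rate:.0%}")
```

Comparing the leak rate before and after moving a wall, inside an A/B test, is one way to decide whether that wall belongs earlier or later in the flow.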

Think of soft walls as lining up dominoes. Order them correctly and one push creates momentum toward a sign-up.

A/B TEST #2: Streaks

THE QUESTION: Duolingo, like many other apps, wants to encourage users to develop a regular behavior — in this case, completing a language lesson every day. In fact, the product essentially doesn’t work unless a user visits regularly. “Over my life, I’ve studied four languages — and can speak five. One of my takeaways is that there’s no way one can learn a language on just Saturdays or Sundays,” says Gotthilf. “We need to get people to do it every day or every other day for languages to stick.”

But that’s easier said than done. “With all the MOOCs and educational platforms out there, there are a lot of ways to learn awesome things online,” says Gotthilf. “People get really excited about signing up, but those services have abysmal retention rates. Because getting people to come back is really hard.”

That’s why the Duolingo team looked for inspiration in an industry outside the education space: the world of gaming. “In order to consider Duolingo as a game, we basically had to find ways to make things more fun,” says Gotthilf. “We’d look for examples from games that we really liked and say, ‘If we were to transpose this into Duolingo, what would that look like?’”

For Duolingo, streaks (a running count of how many days in a row a user has used the product) have been a game changer, and a metric that Gotthilf and her team continue to focus on. “It's like in a video game, where you have to do something every day or you lose your rank,” she says.

Streaks reward — and gamify — persistence.

THE TEST: The introduction of the Duolingo streak marked a major turning point in how users interacted with the app — and how Gotthilf and her team could respond.

For starters, a streak requires an established goal, so the team added goal-setting to the account setup process. “You determine how much Duolingo you want to do per day,” says Gotthilf. “If you don't hit your goal, you can't keep your streak. It’s not just about opening the app every day.”

Goals, in turn, gave the growth team a meaty new way to think about notifications. “Now we actually have a reason to tell you to come back. It’s not just ‘Come back to Duolingo,’ which is super spammy and annoying. We can say, ‘You have a goal. Reach your goal today.’ Or ‘Time to do your daily lesson.’ That kind of prompt is good. You can come from a place of helping the user maintain her own goal.”

They played with a number of factors, starting with when, and how frequently, people were pinged. Timing can make or break any notification. Get one when you can’t do anything about it, and it’s a missed opportunity — or, worse, an annoyance. “If you’re at work and you get a notification about something fun, or you’re about to go to sleep, you're probably going to ignore it,” says Gotthilf. “There are specific times of day that are better for getting certain types of notifications, and that depends a lot on what kind of app you are.”

Of course, there’s no one-size-fits-all timing, and Duolingo is communicating with users all over the world, too. They ran a number of tests, and ultimately landed on the interval that’s been in place ever since: 23.5 hours after a user’s last session. “We assume that whenever you used our app was a good time for you to use the app that day. So we assume that it might be a good time for you to use the app the next day too. We want to help our users create a habit. We want to make it like brushing your teeth.”
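As a rough sketch of that timing rule, the snippet below schedules a reminder 23.5 hours after a user's most recent session. Only the 23.5-hour figure comes from the interview; the function name, the choice to anchor on a single "last session" timestamp, and the insistence on timezone-aware datetimes are assumptions made for illustration.

```python
from datetime import datetime, timedelta, timezone

# 23.5 hours is the interval cited in the article; everything else is assumed.
REMINDER_DELAY = timedelta(hours=23, minutes=30)


def next_reminder(last_session: datetime) -> datetime:
    """Schedule the next reminder 23.5 hours after the user's last session."""
    if last_session.tzinfo is None:
        # Naive timestamps are ambiguous for a worldwide user base.
        raise ValueError("use timezone-aware timestamps")
    return last_session + REMINDER_DELAY


last = datetime(2024, 3, 1, 19, 0, tzinfo=timezone.utc)
print(next_reminder(last).isoformat())  # 2024-03-02T18:30:00+00:00
```

Because the delay is relative to each user's own activity, the rule needs no per-country schedule: someone who practices at 7 p.m. gets nudged shortly before 7 p.m. the next day, wherever they are.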

Notification content was ripe for testing, too. “We optimized the copy, from funny to something more imperative. We tried using examples of words you’re going to learn. We tried using Duo, our owl mascot, and making it cute and personalized. We tried a quiz right in the notification, and you have to answer it.” The winner, incidentally, was the most personalized version — the “Hi, it’s Duo” message.

It took some time to work through various approaches, optimizing the copy with each new test, but it was time well spent. “It's really worth optimizing factors like this, which affect a very large portion of your users,” says Gotthilf.

Worth it, indeed: the winning copy alone led to a 5% increase in DAUs.

It turns out, though, that streaks can be a double-edged sword. Users grew so invested that losing a streak was demoralizing — and led some people to quit entirely. But in that unplanned outcome, Gotthilf and her team just saw more room for experimentation. Duolingo had developed a currency of sorts — earned by successfully completing lessons — so they played around with new ways to use it.

“The first thing we tried was the Weekend Amulet. You can buy it only on Fridays — now we surface it when you finish a lesson on Friday — to protect your streak if you don't practice on Saturday and Sunday,” says Gotthilf. “The idea was to get ahead of users who would fall off on those lowest-usage weekend days, and then never come back. It helps their learning, because in one situation they're giving up and the other one they're not.”

The amulet was a success: retention went up 2.1% at D7 and 4% at D14 (seven and 14 days later, respectively). But that wasn’t the end of the test. The analysis of an A/B test shouldn’t be confined to increases in one metric; it should be measured against competing goals. In this case, Duolingo ran a test to see if retention and monetization were at odds.

“Though it increased DAUs, we thought the Weekend Amulet might hurt monetization because we have something called streak repair, which people pay for,” says Gotthilf. “We ran an experiment and discovered that the value of the increase of DAUs surpassed any lost revenue from streak repair. It’s always a must to juxtapose increasing DAUs and retention with monetization goals.”

THE TAKEAWAY: Most apps have a habit they’re trying to instill. When possible, have your users set goals, such as a frequency for writing, sleep or keeping in touch with friends, from the start. In other words, help them define their own expectations of usage from the outset. It’s less important that it’s spot-on, and more critical that it’s user-determined. This helps open the door to smarter notifications. Double down by pinging them at the time of day that’s just shy of 24 hours since they last used you.

Lastly, you may find yourself following a string of tests as one concept gives rise to another (then another). Follow it — those spinoff tests can often amplify each gain dramatically. But remember that you can’t take yourself out of big decisions entirely. “When it comes to decisions that compromise one metric but help another, as in the case of the Weekend Amulet — or ethical decisions or brand-perception decisions — you can run tests to gather data,” says Gotthilf. “But at the end of the day, sometimes you do have to make a human decision.”


A/B TEST #3: Badges

THE QUESTION: The addition of badges to Duolingo has become one of Gotthilf’s favorite projects, though it wasn’t at first. She had wanted to test it for years, but was cautious of its return due to the high investment it’d require. But her team advocated for it, and it’s become a gamble that’s really paid off. Their hunch? Users would complete more lessons, more often, if they were rewarded for meaningful accomplishments.

That hunch was right, in a big way. Since rolling out V1 alone, they’ve seen a 2.4% increase in DAUs. “Session starts — how often people begin a lesson — increased by 4.1% and session ends — the number of lessons users are completing — have increased by 4.5%,” she says. “So that tells us that people are not only starting more lessons, but they're also finishing lessons they would have given up on because they want to get a badge.”

There were unexpected boosts, too. A 13% increase in items purchased in the Duolingo store, for example. And a 116% increase in friends added. “People suddenly think, ‘Ooh, badges. I want to compete with my friends.’ So they add friends to their profile, which is something they weren't doing before. And now that we're starting to think about social and referrals, it's really valuable to us,” says Gotthilf.

Badges were an all-around triumph. So it might surprise you to learn that the experiment almost didn’t happen, derailed by a previous failed attempt. “The first time we tried to do anything with badges and achievements was a year ago. We wanted to test the minimum viable product, so we basically gave users a badge that said ‘You signed up.’ It had no effect on anything, so we killed it.”

It wasn’t until the post-mortem, though, that the team walked through the experience and realized that a sign-up badge didn’t capture the spirit of achievement at all. Here were the key lessons:

- Signing up is rarely an achievement that instills pride.

- Getting one badge doesn't matter; what matters is having several badges.

- What people want is to see their collection of badges.

It’s one of the crucial lessons of A/B testing:

The simplest way to implement an idea effectively may not be the simplest way to implement that idea. “Minimum” comes first in “MVP,” but “viable” is at its core.

THE TEST: The next time around, the team developed a much more robust experience. “We took the time to design several badges, and to find a place in the app where these badges would live. We thought about the most rewarding parts of Duolingo, the areas where users could feel the most positive.”

Past experiences aside, though, it was still the V1 of a new test, and the team needed to limit themselves (they’ve since rolled out a V2 that has tackled more complexity, such as tiers of badges). As is often the case with weighty, creative experiments, that meant pumping the brakes. Scope expands as people get excited. “There were upward of 70 badge ideas on the table. Some people wanted to build a second, third, fourth tier of badges. Others wanted to create a system where users could see their friends’ badges,” says Gotthilf. “I have to be the person who says, ‘This is too complicated. We need to scale back.’ One way that we’ve found to stay in bounds is to have either a team manager or a PM sit with the designer and do a design sprint. This has been a recent development, and an important one.”

Product managers are typically very close to the metrics. They understand what you’re trying to accomplish, and also know where designers and engineers are spending their time. “When you get those key players together, they can sit down and level. Conversations commonly include: ‘Okay, this we can probably do without. That we could probably do without.’”

The process becomes a peer-to-peer negotiation, not a series of top-down decrees. “I’ve found that's the most effective way to get things done and keep people’s motivation up. The best outcome is when you're able to get the people in the room to arrive at a conclusion that's within a stone’s throw of what you had hoped. And even better, it’s their idea they’re now championing, even if it resembles yours.”

THE TAKEAWAY: Successful testing demands balance: a minimum viable product that is neither a toe in the water nor a full plunge, neither a shabby rendering of an idea nor its most robust implementation. For first features with promise or eager second attempts such as Duolingo’s badges, try pairing PMs or team managers with designers in early design sprints. The two functions serve as counterbalances, helping sustain the right levels of enthusiasm and execution. If the experiment falters or flops, don’t lose focus or steam in the post-mortem. There are footholds for the next experiment littered throughout.

A/B TEST #4: The In-App Coach

THE QUESTION: Sometimes inspiration comes from external sources. Several members of the team had been reading behavioral psychology studies that suggested it was more powerful to encourage people based on their ability to improve their skills rather than their natural intelligence.

“It’s the idea that telling your kids, ‘You did so well on your test because you studied hard’ is much better than saying, ‘You did so well on your test because you're a genius,’” says Gotthilf. The team decided to pursue this idea — that agency over a difficult task is motivating — by creating an encouraging in-app coach.

THE TEST: Enter Duo, Duolingo’s supportive owl mascot, who now doubles as a coach. “Sometimes you're doing a very hard language. I just started learning Japanese. I make a lot of mistakes,” says Gotthilf. “And when you make a lot of mistakes, you feel bad. You feel like you're failing. And you're prone to giving up. So we have this friendly character pop up on the side and say things like, ‘Even when you're making mistakes, you're learning.’”

He’s there when users are doing well, too. “We tested ‘Whoa, you’re amazing’ copy against growth-mindset copy. Both copies beat out the control, but the growth-mindset copy won overall. So we went with messages like: ‘Wow, your hard work is really paying off.’”

Duo’s encouragement is somewhat controversial among the Duolingo team. “Some people dislike it and find it annoying,” says Gotthilf. Still, the results speak for themselves: They saw a 7.2% increase in D14 retention. “So 14 days later, we’re getting 7.2% more people coming back to Duolingo because we added this language and cheerleading owl in the lesson. It's crazy surprising. I'll keep those results.”

THE TAKEAWAY: Sometimes, you have to put your personal tastes — and especially your assumptions — aside. In this case, the in-app coach did surprisingly well, moving the needle almost as much as the long-desired badges project. “Other times, you might be really excited about an idea and think it's going to make all the difference in the world. Then you A/B test it, realize it's not doing anything you thought it would, and have to move on.”

The Tenets of Testing

These are just four of the more than 70 types of tests that Gotthilf and her team have run. Over the course of her career, Gotthilf has arrived at a few cherished principles that consistently serve her well—whether the test requires six lines of code or six weeks of all-hands-on-deck hustle.

Rake in and rank continuously.

If you feel like there are infinite tests you could run, you’re right. That’s also the moment you most need to limit yourself. “That’s the hardest part of A/B testing,” says Gotthilf. “Everyone has a different opinion of what we should do, so prioritizing is really crucial to saving our team time and grief.”

They don’t edit at the brainstorming stage, though. Any idea for a test — from anywhere in the company — is added to a master list in JIRA. “We have a zillion ideas. Probably seventy-five percent of them are pretty crap,” says Gotthilf. “But anytime someone goes to a conference, has an inspiring conversation, or we do a brainstorming session, we keep adding to the list.”

Then, about twice a quarter (“not often enough!”), Gotthilf and her team comb through the list for new testing fodder. When it comes to choosing one, they employ a simple tool at every marketer’s disposal: ROI. With two key questions, you can make an informed guess about the return of any test you consider:

1. How many people will be affected by this change?

Otherwise put, where is this change in your funnel? In the case of Duolingo, for example, a change to the sign-up page — which most users see — touches more people than a change to a page buried deep in a language lesson. They also consider which languages will be affected, and which operating system.

“Consider those areas, and you’ll have an idea of how many people you can touch by making a change. Then ask yourself, ‘Okay, so if this test is successful, how much can we move this needle?’” says Gotthilf. “Our guideline is one percent. If something improves our numbers by at least one percent, that's when we consider launching. Otherwise, we don't.”

Of course, one percent for Duolingo — a mature company with 200 million users around the world — is not the same as one percent for an early-stage startup. Gotthilf rarely thinks in terms of hard numbers, but she does offer one rule of thumb: 100,000.

“Statistically significant results are key. Roughly speaking, you should have at least 100,000 DAUs to run an A/B test.”

Beyond hitting that statistical significance, though, she’s all about percentages. “For new apps, you should focus on A/B tests that bring a 20–30% increase in your metrics,” says Gotthilf. Over time, that figure will change, and you’ll notice that even your most successful tests are improving results by 20%, then 15%, then 10%. And that’s okay; you can move your baseline as you grow. “The important point is to have a baseline, so you’re not pushing out just anything.”

It’s not an exact science. You’ll need to determine what metrics best measure your success (for Duolingo, that’s daily active users) and learn your own usage patterns. “As you examine each stage of your funnel, you’ll start to get a sense of how many users you’re dealing with — and where the leaks in your bucket are. You’ll also pick up certain benchmarks in your industry and develop your own intuition.”
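To see why user counts and lift size interact, here is a minimal sketch of one common significance check, a two-sided two-proportion z-test, using only Python's standard library. The interview does not say which statistical method Duolingo uses, so the choice of test, the function name and all of the numbers are assumptions for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (relative lift of B over A, p-value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return (p_b - p_a) / p_a, p_value


# Hypothetical: 50,000 users per arm, a 1% relative lift on a 40% baseline.
lift, p = two_proportion_z_test(20_000, 50_000, 20_200, 50_000)
print(f"small test: lift={lift:.1%}, p={p:.3f}")

# Same rates with ten times the users per arm.
lift_big, p_big = two_proportion_z_test(200_000, 500_000, 202_000, 500_000)
print(f"large test: lift={lift_big:.1%}, p={p_big:.5f}")
```

With these made-up figures, the same 1% lift is not distinguishable from noise at 50,000 users per arm but is clearly significant at 500,000. That is consistent with the advice above: smaller apps need to chase bigger lifts, while a large user base can reliably detect small ones.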

2. How many hours will go into running the test?

Next, you’ll need to gauge your own team’s time, and the resources you’ll need to devote to get a test off the ground. Do you think you can get the same return on one test, but at half the engineering investment of another? Well then, that’s probably your winner—tackle that one next.
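The two questions above can be combined into a rough ranking score. The sketch below is one hypothetical way to do it, not Duolingo's actual process: the `ExperimentIdea` class, the score formula (users reached times expected lift, divided by engineering hours) and the sample backlog are all invented for illustration. Only the 1% baseline comes from the interview.

```python
from dataclasses import dataclass


@dataclass
class ExperimentIdea:
    name: str
    users_reached: int      # question 1: how many people see the change
    expected_lift: float    # guessed relative improvement, e.g. 0.01 for 1%
    engineer_hours: float   # question 2: cost to build and run the test

    @property
    def roi(self) -> float:
        """Expected extra active users per engineering hour (a rough guess)."""
        return self.users_reached * self.expected_lift / self.engineer_hours


def rank(ideas, min_lift=0.01):
    """Drop ideas below the lift baseline, then sort by estimated ROI."""
    viable = [i for i in ideas if i.expected_lift >= min_lift]
    return sorted(viable, key=lambda i: i.roi, reverse=True)


# Hypothetical backlog, for illustration only.
backlog = [
    ExperimentIdea("badge redesign", 400_000, 0.03, 120),
    ExperimentIdea("deep lesson tweak", 50_000, 0.005, 10),
    ExperimentIdea("sign-up page copy", 1_000_000, 0.02, 40),
]

for idea in rank(backlog):
    print(f"{idea.name}: about {idea.roi:.0f} users per engineering hour")
```

The inputs are guesses, so the output is only as good as the team's intuition. The value of writing it down is that every idea gets judged on the same two questions.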

Refine and repeat.

“There’s a lot of juice to be squeezed out of any big test,” says Gotthilf. Take Duolingo’s addition of achievement badges: Adding them yielded a huge bump in DAUs, so the team turned their attention to making them better. “Now we can ask: what other badges do we want to have? How should they interact with other parts of Duolingo? It opens the door for a number of new tests and ways to measure user behavior.”

People will always be most excited by the big ideas, the chance to dramatically boost a company’s numbers with a fresh new look or a game-changing feature. But the reality is that only combining those with smaller, sometimes barely perceptible, changes keeps the needle moving.

“You have to rein people in and engage them in working on the boring, tiny iterations that are maybe going to get you one or two percent,” says Gotthilf. “Reaching your goal takes leaps and bounds, and consistent long-term growth, too. It’s crucial to have a diverse portfolio of big bets and small, more surefire bets.”

How do you stay on track as you hone your A/B test strategy?

- Design with restraint. It’s easy to get so caught up in a testing ethos that you want to try every possible implementation of an idea. “You could do seventy designs of your home screen and just run that. But it’s very difficult to get statistically significant results and not make mistakes in your analysis when you do that,” says Gotthilf. “So, stick to no more than three arms per experiment: a control and two test conditions. If we realize we want to test something else, then we'll have to wait to run that afterward.”

- Stand firm on what you stand for. There are some tests that shouldn’t be run. Not because they won’t be successful, but because even if they are, you’d have to abandon your company or brand principles to implement them. “At Duolingo, design is sacred. I recall experimenting with the design of the app’s sign-up page. The winning version featured a smiling cartoon girl. Trouble was, that character didn’t exist anywhere else, and our design team let marketing know that it was off-brand,” says Gotthilf, noting that this was before the company’s practice of a formal design review process. “In the end, we didn’t abandon the cartoon, but continue to run tests so we can meet our design guidelines and reach that same number of users. I’ll admit, it can be frustrating to spend more time on these designs, but pushing toward standards is vital in an experimentation-minded organization.”

We run 5-8 experiments at a time, but elsewhere in the company we’re running 20, 30, 80 tests simultaneously. It's easy to slip imperceptibly with each experiment. There must be areas where you won’t compromise.

Bringing It All Together

The value of A/B testing is well known. To earn and defend an edge, the best technology companies continuously test all aspects of their product. When it comes to A/B testing, don’t tarry. Experiment with the sequence of soft and hard walls before sign-up. Give users extra opportunities to maintain habits, whether that’s by gamifying streaks or providing notifications in line with user-defined goals. Beware of falling prey to the “minimum” part of MVP at the expense of the “viable” component when testing. Embrace behavioral psychology to craft messages that promote a growth mindset. These tips, alongside Gotthilf’s hard-earned tenets on A/B testing, can help any growth leader test their way onward and upward.

“We A/B test nearly everything and focus on whatever produces results. Whatever doesn't, we don't. It’s a very straightforward way of looking at things. Instead of leaving decisions to opinion or egos or background, we let the metrics make the vast majority of decisions,” says Gotthilf. “But there’s one big exception. Even if you’re an A/B fanatic, one thing trumps metrics: mission. Ours is to bring free language education to the world. That's something we take very seriously, and we’re tested every day. There are some ideas — like gating lessons behind a paywall — that we just don't test, because they fundamentally go against what we believe in. But anything in service of that mission, we’ll test. That’s our best bet in getting there.”

Illustration by Alejandro Garcia Ibanez. Photo courtesy of Duolingo.